Venture Investors: Don't Get Duped By AI

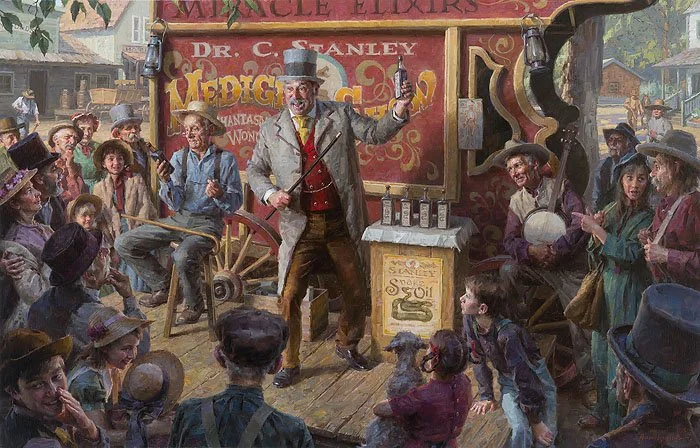

Let me set the scene: A roomful of investors watch a pitch where the founder confidently demonstrates an "AI-powered" solution. The product looks dialed-in, the team seems sharp, the burn rate is lean, the right buzzwords fly. The opportunity feels massive.

Six months later, you discover that the demo was smoke and mirrors, not much more than an exceptional mock-up. Drilling in, you learn that the solution you witnessed was vibe-coded using generative AI, and that the founder hired a contractor to fix the obvious bugs the AI couldn't figure out. The AI breaks in production more often than it works, and scaling requires burning through cash faster than a crypto mining farm.

Unfortunately, weak diligence in AI investments is rampant. Existing due diligence frameworks don't work well for AI, and most investors don't know enough about the technology to ask the right questions. I'm advising other investors to add three specific topics when looking at AI opportunities—basically, how deep is the company's understanding of AI-specific technology, go-to-market, and operational issues.

What's below breaks out those three general areas in a fair amount of detail. AI can be really tough to evaluate for three reasons: it's still largely arcane knowledge; it can make a lesser startup appear innovative, competent, and lean; and, let's be honest, there's a lot of greed-and-FOMO-driven desire to get some AI into the old portfolio. So this article is fairly heavy on the detail, because honestly a lot of people could use it.

So whether you're looking at an AI pure-play or a startup with AI core to the product—logistics optimization, fraud detection, manufacturing quality control, whatever—here are some diligence topics that will help you separate real feats of engineering from convincing vaporware.

Before We Continue: A few investors asked for something to help them develop a diligence checklist. Here you go.

1. Verify There's Actual Technical Depth

The first red flag: founders who can't explain their technical approach without buzzwords. Real AI engineering involves hard trade-offs and specific architectural decisions. It's not "we have an agentic bla bla bla" with no rationale or deeper detail. Here are some surface-level questions and tactics that will quickly reveal a lack of technical depth:

Insist that the technical brain trust attend the initial pitch. Insist on seeing and talking to the CTO, lead architect, or whoever built the core system. Have your technical advisor in the room or on a web conference to question and evaluate. Generative AI coding tools have made it trivially easy to create impressive demos and working prototypes without deep technical understanding. Someone can use Claude Code or GitHub Copilot to build a functional AI application while having zero understanding of the underlying mathematics, scaling challenges, or system design principles.

If the company wants to push this off—red flag. They may not actually have a technical leader and are looking for lead time. I've seen several cases, more on the angel-investing side, where founders had no technical partner but convinced a technical advisor (once it was just a founder's housemate) to represent them as "the CTO".

Request a technical architecture walkthrough. This is the most important and intensive technical diligence topic, so there's a lot here. Ask technical team members to explain their architectural decisions, not just describe what they built. Real teams will have strong opinions and solid documentation regarding framework choices, infrastructure requirements, and scaling bottlenecks. If the technical leads can't engage in substantive discussions about these choices without referring to documentation, you're looking at a team that's assembling solutions rather than engineering them.

Here's a neat red flag for the technical folks: I've observed that fakers using generative AI coding tools to "build" what they call a "solution" almost always generate a Python monolith.

Again, this is a place where having a solid technical diligence advisor pays dividends in assessing technical depth with hard questions about the AI components themselves, and of course the rest of the application (good AI dropped into bad application does not a good product make).

Here's a key question that tees up deeper diligence: ask where technical design decisions are tracked—Confluence? Slack? How about QA testing strategies for the traditional parts of the app? Bug tracking and L3 issue resolution? Note their answers, and require artifact reviews if you make it to deep diligence. But beware window dressing—fake artifacts are also an area where AI can produce some compelling fluff. Use a technical diligence advisor who can suss out the difference.

Sometimes the company will be strongly opposed—sometimes violently opposed—to showing you technical details, usually citing concerns about protecting their highly-innovative-world-changing-super-secret-sauce. If they're not even willing to consider it under NDA or in a supervised setting, it's a red flag.

Any founding team that has created something truly special and is confident in its competitive moat will be falling over themselves to show it off. Hell, you usually can't shut them up about it. So if the company balks at showing you technical details, it's likely they (1) have no defensible differentiation, (2) have no actual innovation, (3) don't understand how investment works and so will be difficult to deal with, or (4) are straight-up hustling you.

Ask to see their model evaluation metrics over time. It's very common for the snake-oil dealers of AI startups to know nothing about model performance. These folks don't actually understand model selection, post-training, or model drift, and they think Hugging Face is just an emoji. Ask them to show you reports on model testing: not just accuracy on a test set, but performance in production with real data. If they can't show you degradation curves, error analysis, or even basic monitoring dashboards, you're looking at AI theater.

Probe their worst failures. This is an easy one. Every real AI system has failed spectacularly at some point. If they can't tell you—in technical detail—about edge cases that broke their models or production incidents they've had to debug (operative term: production), they either haven't built anything real or they're flat-out lying.

2. Double-Click On Data and Training

Data is the actual moat in most AI businesses, not the model architecture. But data quality and provenance are where most startups cut corners. Also, data and training are points where most AI bullshit artists get tripped up. Here are the kinds of topics you want to be drilling in on:

How are they sourcing and labeling training data? If they're relying on public datasets or Mechanical Turk-style labeling, their competitive advantage is probably minimal. Strong companies have developed proprietary data collection methods or have unique access to high-quality datasets.

What post-training techniques are they using? Fine-tuning methods such as SFT, DPO, and RLHF change the model's weights, while RAG and prompt engineering adapt its behavior without retraining. There are lots of options, and each serves different purposes and has different cost/quality trade-offs. If they can't explain why they chose their specific approach or what alternatives they considered, that's concerning.

Are they treating training data as a strategic asset? This means both operational processes (data versioning, quality control, privacy protection) and legal frameworks (data ownership rights, retention policies, compliance requirements). Companies that haven't thought through data governance will hit scaling walls.

Note: I didn't go into a ton of detail here since it's a fairly specific technical area. You'll either have to spend time learning about it on your own (too much for a blog post) or engage a technical advisor to assess.

3. Compute Economics and Vendor Dependencies

AI has a dirty secret hiding in plain sight: inference costs can scale faster than revenue, especially for generative AI applications, and double-especially for poorly designed AI systems. Many founders either don't understand this or hope you won't ask.

What are their actual unit economics? Not projected costs based on list prices, but real per-transaction or per-user costs including compute, storage, and API calls. How do these change as they scale? Many AI startups are just excited about what they made and discover too late that their cost structure makes profitability impossible. And if they argue that profitability doesn't matter because they plan a quick exit based on their IP alone—run.
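To make the unit-economics question concrete, here is a minimal sketch of the arithmetic you want the founders to be able to do with their own measured numbers. The class name, field names, and every dollar figure are hypothetical and chosen only for illustration; a real model would also include support, fixed infrastructure, and any volume discounts.

```python
from dataclasses import dataclass


@dataclass
class RequestCosts:
    """Hypothetical measured cost of serving one transaction, in USD."""
    llm_api: float   # third-party model API charges
    compute: float   # the company's own GPU/CPU time
    storage: float   # vector store, logs, retained data


def cost_per_transaction(costs: RequestCosts) -> float:
    """Total variable cost of a single transaction."""
    return costs.llm_api + costs.compute + costs.storage


def gross_margin(revenue_per_user: float,
                 transactions_per_user: float,
                 costs: RequestCosts) -> float:
    """Gross margin (as a fraction of revenue) for one user in one billing period."""
    if revenue_per_user <= 0:
        raise ValueError("revenue_per_user must be positive")
    variable_cost = transactions_per_user * cost_per_transaction(costs)
    return (revenue_per_user - variable_cost) / revenue_per_user


# Illustrative numbers only: a flat $50/month plan with usage-driven costs.
costs = RequestCosts(llm_api=0.03, compute=0.01, storage=0.002)
for monthly_transactions in (400, 1200, 2000):
    margin = gross_margin(50.0, monthly_transactions, costs)
    print(f"{monthly_transactions} transactions/month -> margin {margin:.1%}")
```

The point of the loop is the shape of the curve: on a flat-price plan, margin falls as usage per user rises, so the heaviest (and often happiest) users can be the ones who lose the company money.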

How dependent are they on specific AI providers? If they're built entirely on OpenAI's API and GPT-4 pricing doubles tomorrow, what happens? Strong teams have evaluated alternatives, understand the technical effort required to switch, and have contingency plans. Vendor lock-in was an early topic in the cloud world, and the same concerns apply in this new realm. Complete dependence on a single vendor that raises rates, shifts focus to a different market, or just goes under can kill an AI business instantly.
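One sign that a team has thought about vendor dependence is that provider calls sit behind a thin interface instead of being scattered through the codebase. The sketch below shows the general idea with stand-in classes; `CompletionProvider`, `StubProvider`, and `complete_with_failover` are hypothetical names, and no real vendor SDK is called. In practice, switching providers also means re-testing prompts and output quality, which this does not capture.

```python
from typing import Protocol, Sequence


class ProviderUnavailable(Exception):
    """Raised when a provider cannot serve the request."""


class CompletionProvider(Protocol):
    """Minimal interface the rest of the application depends on."""
    name: str

    def complete(self, prompt: str) -> str: ...


class StubProvider:
    """Stand-in for a wrapper around a real vendor SDK (no network calls)."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ProviderUnavailable(self.name)
        return f"[{self.name}] {prompt}"


def complete_with_failover(providers: Sequence[CompletionProvider],
                           prompt: str) -> str:
    """Try providers in priority order and return the first successful answer."""
    for provider in providers:
        try:
            return provider.complete(prompt)
        except ProviderUnavailable:
            continue  # move on to the next provider in the list
    raise ProviderUnavailable("all providers failed")


providers = [StubProvider("primary", healthy=False), StubProvider("secondary")]
print(complete_with_failover(providers, "Summarize this invoice"))
```

A useful diligence question follows directly: how many places in the codebase would have to change to add a second provider to that list?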

Do they understand their scaling assumptions? Moving from 1,000 to 100,000 users isn't just about buying more GPU time or moving to a "better model". It requires model optimization, caching strategies, a scalable underlying architecture (e.g., monolith vs. microservices), and potentially a redesign. Have they modeled these transitions? How will they fund scaling? How does this impact gross and net margins, both during the transitional periods and beyond? I know a lot about high-scale technology solutions, and believe me, the cost of getting over a scalability plateau can be crippling.

4. Model Performance and Drift Monitoring

This is a whopper, because it's a risk that isn't an "if" situation; it's a "when" situation. Even though it will definitely destroy customer renewals and company reputation, it's often misunderstood, overlooked, or kicked down the road.

AI models degrade over time as real-world data diverges from training data. This isn't theoretical—it's inevitable. How companies handle model drift separates the sophisticated from the naive. Companies that don't understand this are doomed to be fighting customer retention battles until they get a handle on model performance and drift.

How do they detect performance degradation? Real-time monitoring, A/B testing against baseline models, or customer feedback loops? Do they have alerting when model performance drops below acceptable thresholds?
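As one illustration of what drift detection can look like, here is a minimal sketch of the Population Stability Index (PSI), a common way to compare the distribution of a model input or score in production against a training-time baseline. This is an example technique, not something the questions above prescribe; the function name, bin count, and sample data are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between two samples, using equal-width bins over the baseline range."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread")
    width = (hi - lo) / bins

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Illustrative data: production values have shifted toward the high end.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(training_scores, production_scores))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any alerting threshold is an assumption the team should be able to justify for their own data.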

What's their retraining strategy? How often do they retrain models, what triggers retraining decisions, and what does that cost in both compute and engineering time? Companies without systematic retraining processes will see their products slowly become less useful.

Do they have fallback mechanisms? When AI components fail—and they will—what happens? Rule-based backups, human-in-the-loop processes, or graceful degradation? The answer reveals how seriously they've thought about operational reliability.
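The fallback options named above can be combined in a few lines of routing logic. The sketch below uses the fraud-detection case as an example; the `Decision` type, the confidence threshold, and the $10,000 rule are all hypothetical, and the "model" is any callable returning a label and a confidence score.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Decision:
    label: str
    source: str  # "model", "rules", or "human_review"


def rule_based(transaction: dict) -> Decision:
    """Hypothetical conservative backup rule used when the model is unavailable."""
    label = "flag" if transaction.get("amount", 0) > 10_000 else "approve"
    return Decision(label, "rules")


def decide(transaction: dict,
           model: Callable[[dict], Tuple[str, float]],
           min_confidence: float = 0.8) -> Decision:
    """Use the model when it is up and confident; otherwise degrade gracefully."""
    try:
        label, confidence = model(transaction)
    except Exception:
        # Model outage or error: fall back to the rule-based backup.
        return rule_based(transaction)
    if confidence < min_confidence:
        # Low confidence: route to a person instead of guessing.
        return Decision("needs_review", "human_review")
    return Decision(label, "model")


def broken_model(transaction: dict) -> Tuple[str, float]:
    raise RuntimeError("inference service down")


print(decide({"amount": 25_000}, broken_model))
print(decide({"amount": 40}, lambda t: ("approve", 0.55)))
print(decide({"amount": 40}, lambda t: ("approve", 0.97)))
```

What matters for diligence is less the code than the evidence: how often each path fires in production, and whether anyone is watching those rates.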

What about customer expectations? In any commercial enterprise, there are going to be problems and companies have to be able to manage customer communications and expectations. For an emerging market driven by an emerging technology, this is amplified 10x because expectations are high, usually unrealistic, and understanding of the technology is lacking. Any hope of decent customer retention & renewal numbers absolutely demands educating customers about model drift and communicating brilliantly through rough patches. The best companies will actually make customers into assets in detecting and managing drift.

5. Business Continuity and Liability

AI failures can destroy businesses overnight. Smart investors want to know how companies plan for and mitigate these risks.

What happens if their primary AI provider shuts down or restricts access? This isn't hypothetical—I've seen API changes break applications and geopolitical tensions affect AI service availability. Do they have technical and business continuity plans?

How are they handling liability for AI mistakes? If their fraud detection system flags legitimate transactions or their quality control AI misses defects, who's responsible? How are they structuring contracts and insurance to protect themselves and their customers?

Have they stress-tested their legal frameworks? AI regulation is evolving rapidly. Do they understand compliance requirements in their target markets? Are their data handling practices defensible if regulations change?

6. Data Privacy and Security

For companies using large language models as a service (LLMaaS is what I'm calling it, so there), customer data often gets sent to third-party providers. This creates privacy and competitive risks that many startups haven't considered, and compliance costs that they dramatically underestimate in financial planning. SOC 2 audits can cost $50K+ annually, require dedicated personnel, and take months to implement properly. HIPAA compliance can require complete infrastructure redesign. Ask to see their compliance roadmap and budget projections. If they think this is just a "legal checkbox" they'll handle later, they're setting up for expensive surprises when enterprise customers start demanding attestations.

How are they protecting customer data in API calls? Are they stripping sensitive information, using data anonymization, or restricting what data gets sent to external AI services? Do they understand the privacy implications of their architecture?
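As a rough picture of what "stripping sensitive information" means in practice, here is a minimal sketch that redacts a few obvious identifiers before text leaves the company's systems. The patterns and the `redact` function are illustrative assumptions; regexes like these miss plenty (names, addresses, free-text identifiers), and a serious team would typically use dedicated PII detection and test it against real data.

```python
import re

# Illustrative patterns only; order matters because substitutions run in sequence.
PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


message = "Contact jane@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
print(redact(message))
```

A good follow-up question is where this step lives: if redaction happens in one gateway that every outbound AI call passes through, it can be audited; if it is left to each feature team, it probably isn't happening consistently.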

What do their customer contracts say about data handling? If they're sending customer data to OpenAI or Anthropic, have they passed through appropriate privacy protections and liability limitations to their own customers?

Do they have technical measures for data protection? On-premise deployment options, data encryption, audit trails for data access? Enterprise customers increasingly require these capabilities.

What security and compliance standards are they actually subject to, and do they understand the costs? Depending on their customer base and data types, they may need SOC 2, ISO 27001, HIPAA, PCI DSS, or emerging AI-specific frameworks like NIST's AI Risk Management Framework. Have they identified which standards apply to their business model, and more importantly, do they understand what compliance actually costs?

Compliance cost blindness is a huge one: I've seen too many startups budget $10K for "security stuff" when SOC 2 alone can cost 5x that annually, plus the engineering time to actually implement the controls. AI-specific standards deserve particular attention because the regulatory frameworks are still evolving. Companies that wait until customers demand attestations often discover they need to rebuild significant portions of their infrastructure, which can be existential for early-stage companies.

7. Commercial Deployment Reality

The gap between demo and production deployment is where most AI startups hit their first scaling wall. Smart diligence focuses on real customer deployment experiences. This is another one that’s very similar to other diligence cycles, so I will keep it brief.

What friction are they encountering in sales cycles? AI creates new categories of customer concerns: data privacy, integration complexity, training requirements. Have they had enough real sales cycles to understand these patterns?

How much customer training and ongoing support is required? AI products often require customers to change workflows or develop new competencies. Is this documented and budgeted for? Do they understand the total cost of customer success?

Can they show customer deployment timelines and success rates? Moving from POC to production often takes 3-6x longer than founders expect. What does their track record look like?

The Bottom Line

Well, I hope this helps prevent some otherwise fine investors from getting burned.

Remember that AI investment diligence isn't about becoming a technical expert. It's about asking the right questions to separate companies that have solved hard technical and operational problems from those that have built impressive demos on top of someone else's AI, using someone else's AI tools.

The strongest AI companies treat these challenges as competitive advantages rather than necessary evils. They've invested in data infrastructure, built monitoring and reliability systems, and developed deep expertise in their specific AI applications. These investments aren't visible in demos, but they're what separate sustainable businesses from expensive failures.

Next time you see an AI pitch, skip the feature demo and ask about their worst production failure instead. Have your technical diligence advisor hit them with some of the tough questions above. Plumb the depths of their knowledge about the business of AI, not just the technology of AI. The quality of their answers will tell you much of what you need to know.

And if you need technical diligence advisory support, get in touch. I'm usually available for pitch or diligence meetings with relatively short notice (I try to keep Mondays open just for this).

Think you may have invested in a stinker and want to see if it can be turned around? Also get in touch to see if my taking a look makes sense. If the startup’s domain or technology is not in my wheelhouse, I probably know someone who can help.

Happy investing!